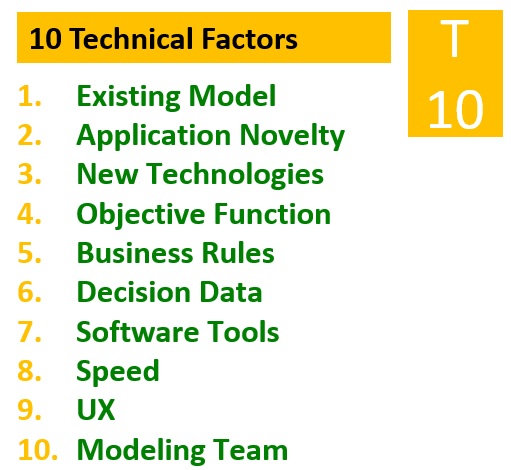

We employ a framework of 20 scorable risk factors, the “Princeton 20,” to manage our optimization projects for deployment into production. There are 10 environmental and 10 technical factors. In the previous post, I addressed the environmental risks. Following are the technical risks.

1. Existing model. It is easy to show we have a better mousetrap if the client already has a model and it is well documented. Most often, we build a model to improve or replace one that is used often but not universally. It is high risk when the scope is to create a model where none exists.

Select mitigators: Insist that the model UX completely capture user behavior; focus on user overrides and timing gaps in usage; focus on improved robustness to data failure.

2. Application novelty. We have engagements where the client does not have a model but, in its industry, models are well known to be used regularly for this application. The risk is high when the model is viewed as something that has never been done before. We do not counsel you to avoid innovation; we encourage you to understand and manage the related high risk.

Select mitigators: decompose the decision process into micro decisions and start with a series of small special purpose/situation models; consider broadening the sponsorship to include other business units or thought leaders within the enterprise.

3. New technologies. Envision that a company’s CTO is crazy about a new AI library, gets the free trial, and requires everyone to try it. I suggest designing the project so it does not rely wholly on that new technology, which might well turn out to be vaporware. A commonsense approach, for those of us who have lived through many battles, is to solve the problem with conventional technology, then see whether the new technology adds value to that baseline.

Select mitigators: Minimum Viable Product (MVP) approach; federate research with non-competitive external groups; rather than focusing only on the state of the art, investigate components of different maturities/tiers as backups.

4. Objective function. For firms like ours that are concerned with deployment into the field, simply figuring out the optimization is not sufficient. At many of our clients, there is a colossal amount of value in rigorously getting the objective function right: it is generally understood but not completely crisp, and it may involve trade-offs between competing KPIs. It is low risk when the client already has the objective function well defined and easily scored; it is high risk when we are tasked to create a numerical objective function for the first time. The natural evolution of our models is to start with a feasible solution and, over time, bring more inputs into the objective function, correspondingly enriching the value of the model.

Select mitigators: thoughtful workshops around objective functions; simulation technologies (“digital twins”); human-in-the-loop best practices, meaning no black boxes and an emphasis on UX.
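As an illustration of the KPI trade-off, here is a minimal Python sketch of a weighted-sum objective. The two KPIs (cost and on-time service level), the candidate plans, and the weights are all hypothetical, and a weighted sum is only one of several ways to combine competing KPIs. The sketch shows why the workshops matter: the same two plans swap rank when the weights change.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    """A candidate solution scored on two hypothetical, competing KPIs."""
    name: str
    cost: float           # lower is better
    service_level: float  # fraction of orders on time; higher is better


def objective(plan: Plan, cost_weight: float, service_weight: float) -> float:
    """Weighted-sum score to minimize: cost is penalized, service is rewarded."""
    return cost_weight * plan.cost - service_weight * plan.service_level


def best_plan(plans: list[Plan], cost_weight: float, service_weight: float) -> Plan:
    """Return the plan with the lowest weighted-sum score."""
    return min(plans, key=lambda p: objective(p, cost_weight, service_weight))


plans = [
    Plan("cheap", cost=100.0, service_level=0.90),
    Plan("premium", cost=120.0, service_level=0.99),
]

# A modest weight on service favors the cheaper plan...
print(best_plan(plans, cost_weight=1.0, service_weight=100.0).name)   # cheap
# ...while a heavy weight on service flips the choice.
print(best_plan(plans, cost_weight=1.0, service_weight=1000.0).name)  # premium
```

Neither plan is "right" until the client agrees on the weights, which is exactly the decision the objective-function workshops are meant to surface.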

5. Business rules. The better understood and more numeric the business rules, the lower the risk for an optimizer. Typically for us, the rules are largely static but are relaxed in different circumstances. Risk is entailed when we are engaged to build consensus on the business rules and codify them.

Select mitigators: collect a body of solved “hard cases” in a test suite; explore adding machine learning techniques instead of relying 100% on SMEs; a UX that allows users to understand and change rules on the fly.
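A minimal sketch of the “hard cases” test suite, assuming the business rule can be written as a Python function. The rule itself (a shift-length limit that is relaxed in peak season), its parameters, and the cases are hypothetical; the pattern is simply to pin each SME-agreed answer as a regression test so that any rule change breaking a hard case is caught immediately.

```python
from typing import Callable


def shift_allowed(hours: float, peak_season: bool,
                  max_hours: float = 10.0, relax_hours: float = 2.0) -> bool:
    """Hypothetical rule: a shift may not exceed max_hours,
    relaxed by relax_hours during peak season."""
    limit = max_hours + (relax_hours if peak_season else 0.0)
    return hours <= limit


# Each hard case pairs rule inputs with the answer the SMEs agreed on.
HARD_CASES = [
    ({"hours": 10.0, "peak_season": False}, True),   # exactly at the limit
    ({"hours": 11.0, "peak_season": False}, False),
    ({"hours": 11.0, "peak_season": True}, True),    # allowed only by the relaxation
    ({"hours": 12.5, "peak_season": True}, False),
]


def failing_cases(rule: Callable[..., bool], cases: list) -> list:
    """Return every hard case on which the rule disagrees with the agreed answer."""
    return [(inputs, expected) for inputs, expected in cases
            if rule(**inputs) != expected]


def strict_rule(hours: float, peak_season: bool) -> bool:
    """A flawed version of the rule that forgets the peak-season relaxation."""
    return hours <= 10.0


print(failing_cases(shift_allowed, HARD_CASES))     # []
print(len(failing_cases(strict_rule, HARD_CASES)))  # 1
```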

6. Decision data. In many of our projects, the data are captured sufficiently and, going in, we believe they can be cleaned well enough for a first-cut decision. If we need to determine whether the data exist and can be cleaned well enough to outperform the status quo, there is risk.

Select mitigators: triage meta algorithms (identify areas where the model works, usually works, and does not work); find exogenous data sources that are more complete to improve the signal; explore the value proposition of anomaly/fraud detection.
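One simple reading of the triage idea, sketched in Python: group historical decisions by segment, measure how often the model beat the status quo, and label each segment. The segment names, the win/loss data, and the 0.9 and 0.6 thresholds are hypothetical, and a real triage would likely use richer measures than a plain win rate.

```python
from collections import defaultdict


def triage(results, works_at: float = 0.9, usually_at: float = 0.6) -> dict:
    """Label each segment by how often the model beat the status quo.

    results: iterable of (segment, model_beat_status_quo) pairs.
    """
    wins = defaultdict(int)
    totals = defaultdict(int)
    for segment, beat in results:
        totals[segment] += 1
        wins[segment] += int(beat)

    labels = {}
    for segment, n in totals.items():
        win_rate = wins[segment] / n
        if win_rate >= works_at:
            labels[segment] = "works"
        elif win_rate >= usually_at:
            labels[segment] = "usually works"
        else:
            labels[segment] = "does not work"
    return labels


history = (
    [("east", True)] * 10
    + [("west", True)] * 7 + [("west", False)] * 3
    + [("north", True)] * 2 + [("north", False)] * 8
)
print(triage(history))
# {'east': 'works', 'west': 'usually works', 'north': 'does not work'}
```

The labels give a deployment a defensible scope: automate where the model works, keep a human in the loop where it usually works, and fall back to the status quo elsewhere.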

7. Software tools. When all the tools are completely in the hands of the modeling team and budget is assured, there is low risk. Typically for us, the tools are largely prescribed, and our team is experienced with them and confident they will be adequate. The evaluation of new tools or products to accomplish some or all of the deployment brings risk.

Select mitigators: early addition of experienced analytics tool architects; abandon tools early if they are impeding progress; a loosely coupled, microservices-based model and data architecture.

8. Speed. I do not mean solve speed as commercial optimization solvers measure it; I mean decision speed. The “superpower” of optimization practitioners is that through our models we can solve much faster and evaluate far more combinations than a human or a primitive model can. In our view, the risk is neutral if the clients are not very interested in speeding up the decision process. We see risk when a fast decision is deemed “impossible” because of dependencies on exogenous data or decision makers.

Select mitigators: include a sensitivity curve in the cost-benefit analysis (CBA); link with in-house strategy or innovation teams to build the case for faster decisions and a long-term, data-driven vision.
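A minimal sketch of such a sensitivity curve, under a deliberately simple, hypothetical cost model in which the benefit of faster decisions is valued only as analyst hours saved. All inputs (decisions per year, hours per decision, value per hour, annual cost of the solution) are made-up placeholders; the useful outputs are the shape of the curve and the break-even speedup, not the numbers themselves.

```python
def net_benefit(speedup: float, decisions_per_year: int, baseline_hours: float,
                value_per_hour: float, annual_cost: float) -> float:
    """Annual net benefit if each decision takes baseline_hours / speedup."""
    hours_saved = baseline_hours - baseline_hours / speedup
    return decisions_per_year * hours_saved * value_per_hour - annual_cost


def break_even_speedup(decisions_per_year: int, baseline_hours: float,
                       value_per_hour: float, annual_cost: float) -> float:
    """Speedup at which net benefit reaches zero (inf if it never does)."""
    needed_hours = annual_cost / (decisions_per_year * value_per_hour)
    if needed_hours >= baseline_hours:
        return float("inf")  # even instant decisions cannot cover the cost
    return baseline_hours / (baseline_hours - needed_hours)


# Hypothetical inputs for illustration only.
params = dict(decisions_per_year=500, baseline_hours=8.0,
              value_per_hour=100.0, annual_cost=150_000.0)

for s in (1, 2, 4, 8):
    print(f"speedup {s}x: net benefit {net_benefit(s, **params):,.0f}")
print(f"break-even speedup: {break_even_speedup(**params):.2f}x")
```

The curve flattens as speedup grows (hours saved can never exceed the baseline), which is a useful talking point when a sponsor asks how much faster is "fast enough."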

9. UX. A powerful UX is not always necessary. Maybe the model just says hire or don't hire, and that's all you need. We are accustomed to projects where the UX involves substantial human-in-the-loop cooperation. It is high risk when the UX must attract and sustain usage from a diverse population or from users for whom the tool is optional.

Select mitigators: staff with designers experienced with optimization UX best practices; design the ideal model UX and make this drive algorithm selection (rather than vice versa).

10. Modeling team. In our typical projects, the client has executives with successful production deployment experience in business areas other than the one we are working in. Risk is entailed when the client team has successful proofs of concept in place but limited experience with broad production deployment at the intended scale.

Select mitigators: review/audit the model for robustness; blend the team with partners that can supply additional resources on demand; emphasize model robustness and usage over maximizing solution quality.

To conclude, the Princeton 20 framework captures and systematizes our learnings. It helps us score a project ahead of time, come up with explicit mitigators, and conduct post-project reviews to see how successfully we managed risks and problems.
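The post does not prescribe a scoring scale, so the sketch below assumes a hypothetical three-level scale (low, neutral, high) matching the language used for the factors above. It scores only the ten technical factors listed here, totals them, and flags the high-risk factors that need explicit mitigators; the scale, the simple total, and the example scores are illustrations, not the actual Princeton 20 method.

```python
from enum import IntEnum


class Risk(IntEnum):
    """Hypothetical three-level risk scale."""
    LOW = 1
    NEUTRAL = 2
    HIGH = 3


TECHNICAL_FACTORS = [
    "Existing model", "Application novelty", "New technologies",
    "Objective function", "Business rules", "Decision data",
    "Software tools", "Speed", "UX", "Modeling team",
]


def assess(scores: dict) -> tuple:
    """Return (total score, factors rated HIGH that need explicit mitigators)."""
    missing = [f for f in TECHNICAL_FACTORS if f not in scores]
    if missing:
        raise ValueError(f"unscored factors: {missing}")
    total = sum(int(scores[f]) for f in TECHNICAL_FACTORS)
    needs_mitigators = [f for f in TECHNICAL_FACTORS if scores[f] == Risk.HIGH]
    return total, needs_mitigators


# Example pre-project scoring (hypothetical).
scores = {f: Risk.NEUTRAL for f in TECHNICAL_FACTORS}
scores["Existing model"] = Risk.LOW
scores["Objective function"] = Risk.HIGH
print(assess(scores))  # (20, ['Objective function'])
```

Keeping the pre-project scores makes the post-project review straightforward: rescore the same factors and compare.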

Princeton Consultants offers an assessment of an optimization project’s risk factors and recommendations to mitigate them. Contact us to learn more and discuss how this approach could help your organization.